Over the years, artificial intelligence (AI) has become an integral part of smartphone applications. From computational photography to on-device language models, AI now powers many functions on our smartphones. However, AI workloads demand large amounts of parallel arithmetic, which CPUs are not designed to handle efficiently. GPUs stepped in first to meet these demands. But as neural networks grew in size and complexity, GPUs ran into limits of their own. To overcome this, the industry turned to specialised AI hardware, specifically Neural Processing Units (NPUs).

Initially, GPUs in smartphones were used mainly to render images and animate graphics. As AI applications became more common, GPUs found a new purpose in running the mathematical models behind them. Working alongside CPUs, they significantly reduced processing times for functions like facial recognition, object detection, and speech recognition. However, as neural networks grew larger and more complex, GPUs struggled to keep up. Their general-purpose parallel architecture was not tailored to the specific needs of AI processing, and the tight power budget of a smartphone compounded the issue.

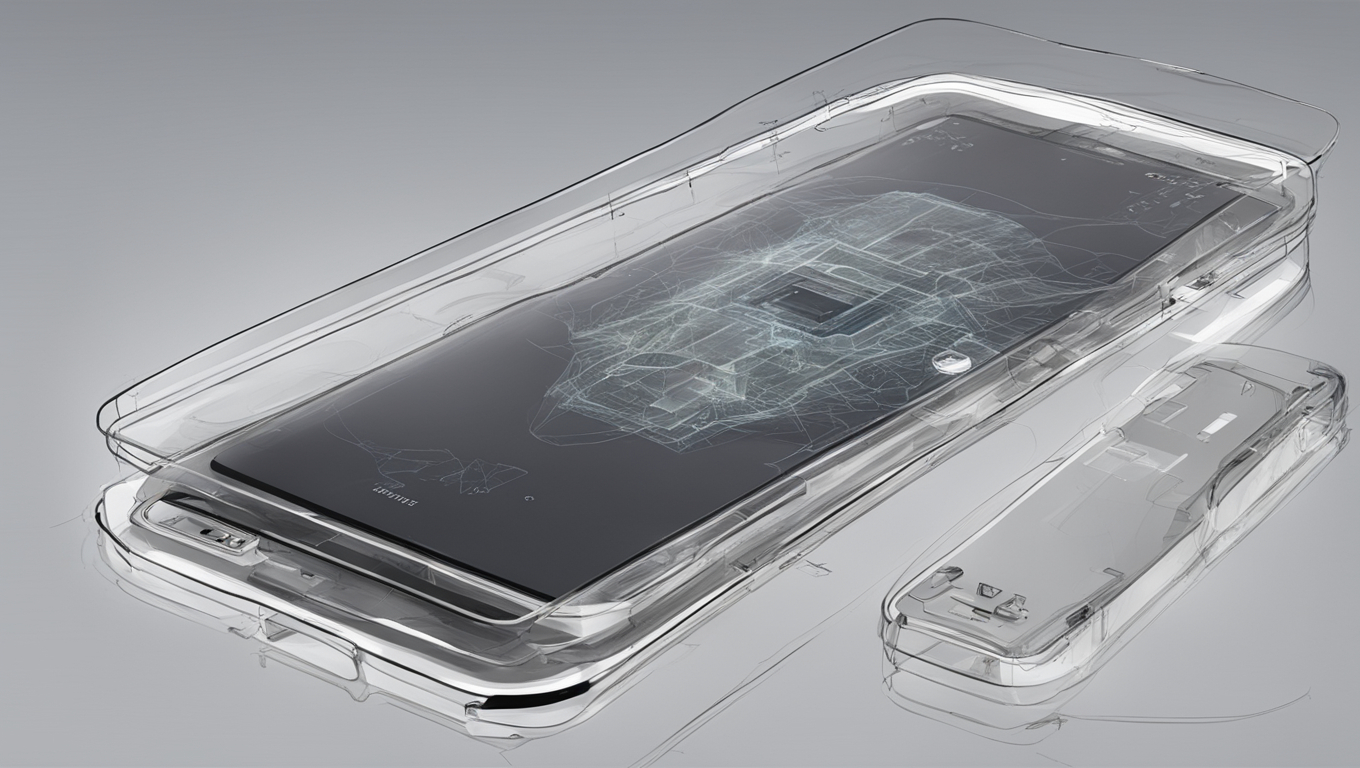

This is where NPUs come into play. NPUs are microprocessors specifically designed for neural network machine learning. They excel at processing massive amounts of multimedia data, such as video and images, using a “data-driven parallel computing” architecture. Unlike GPUs, which run neural networks as general-purpose parallel programs, NPUs model neurons and synapses at the circuit level and process them directly with a deep-learning instruction set. This allows an NPU to complete the processing of a whole group of neurons with a single instruction, making it highly efficient for AI computations. NPUs also offload the heavy lifting of AI processing from the CPU and GPU, keeping the rest of the system responsive and saving battery life.
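To make the "group of neurons with a single instruction" idea concrete, here is a minimal toy sketch in Python. It is not how any real NPU is programmed: `layer_scalar`, `layer_fused`, and the instruction counts they return are hypothetical names and a simplified model invented for illustration. Both functions compute the same small layer (weighted sum, bias, ReLU); the first counts one issued instruction per multiply-accumulate, while the second stands in for a single layer-level instruction that performs all of them in hardware.

```python
def relu(x):
    """Rectified linear unit: negative values become zero."""
    return x if x > 0 else 0


def layer_scalar(weights, biases, inputs):
    """Evaluate a layer one multiply-accumulate (MAC) at a time.

    Returns (outputs, issued), where `issued` counts scalar MAC
    instructions in this toy model.
    """
    outputs, issued = [], 0
    for row, bias in zip(weights, biases):
        acc = bias
        for w, x in zip(row, inputs):
            acc += w * x
            issued += 1  # one instruction per MAC
        outputs.append(relu(acc))
    return outputs, issued


def layer_fused(weights, biases, inputs):
    """Toy stand-in for one hypothetical 'layer' instruction.

    The arithmetic is identical; only the assumed number of issued
    instructions differs (one for the whole group of neurons).
    """
    outputs = [
        relu(bias + sum(w * x for w, x in zip(row, inputs)))
        for row, bias in zip(weights, biases)
    ]
    return outputs, 1


# Four neurons, three inputs each (illustrative values only).
WEIGHTS = [[1, -2, 3], [0, 1, 1], [-1, -1, -1], [2, 0, -1]]
BIASES = [0, 1, 2, -3]
INPUTS = [1, 2, 3]

print(layer_scalar(WEIGHTS, BIASES, INPUTS))  # ([6, 6, 0, 0], 12)
print(layer_fused(WEIGHTS, BIASES, INPUTS))   # ([6, 6, 0, 0], 1)
```

The results match, but the scalar path issues 12 instructions where the fused path issues one. Real hardware differs in many details, but fetching and decoding fewer instructions for the same arithmetic is one reason a dedicated unit can be faster and more power-efficient.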

NPUs have gained prominence in smartphones, with major vendors incorporating them into their devices. Apple introduced its Neural Engine in the A11 Bionic chipset, which shipped in its 2017 iPhone models. Huawei announced its Kirin 970 system on a chip, with a dedicated NPU, the same year. Qualcomm, the dominant Android mobile platform vendor, integrated its AI Engine into its premium 800 series chipsets. MediaTek and Samsung have also built NPUs into their recent chipsets.

While NPUs are designed specifically for neural network tasks, GPUs remain more versatile and can handle a wider range of parallel computing tasks. CPUs, on the other hand, have fewer, more powerful cores suited to general-purpose, largely sequential work. NPU core counts vary greatly by model and manufacturer, so they are hard to compare directly. The general pattern is that CPUs have a handful of powerful cores, GPUs have many simpler cores optimised for parallel tasks, and NPUs have cores specialised for AI computations.

The rise of NPUs in smartphones marks a significant advance in on-device AI processing. With circuitry dedicated to AI computations, NPUs deliver faster results than general-purpose processors on these workloads while also contributing to longer battery life. As AI continues to play a crucial role in smartphone applications, NPUs are poised to become even more prevalent in future devices.
