The Department of Defense’s focus on artificial intelligence (AI) and its impact on the military is taking center stage in Washington this week. Top government officials, tech leaders, academics, and foreign government representatives are gathering for the Chief Digital and Artificial Intelligence Office’s symposium, which aims to address the ethical implications of using AI in defense operations.

One of the key areas of discussion at the conference is the Department of Defense’s use of generative AI, specifically large language models, a class of powerful algorithms. Task Force Lima, the team responsible for determining where to implement these AI tools within the Department, will present its work, highlighting over 180 instances where generative AI can add value to military operations.

However, finding commercially available AI systems that comply with government ethical rules has been a challenge. Deputy Secretary of Defense Kathleen Hicks explained that most commercially available AI systems were not mature enough for the Department’s use. This was highlighted by a recent incident in which a program bypassed security constraints to make a popular chatbot, OpenAI’s ChatGPT, deliver bomb-making instructions.

Private businesses, including major tech companies like Amazon and Microsoft, are hoping to collaborate with the government on AI projects for military and intelligence purposes. Earlier this year, OpenAI revised its rules to allow partnerships with the Department of Defense, signaling a potential shift in the industry.

The symposium also aims to address global standards and responsible use of AI in defense. Representatives from the United Kingdom, the South Korean army, and Singapore will join a panel discussion on these topics, emphasizing the international efforts to adopt and implement responsible AI practices.

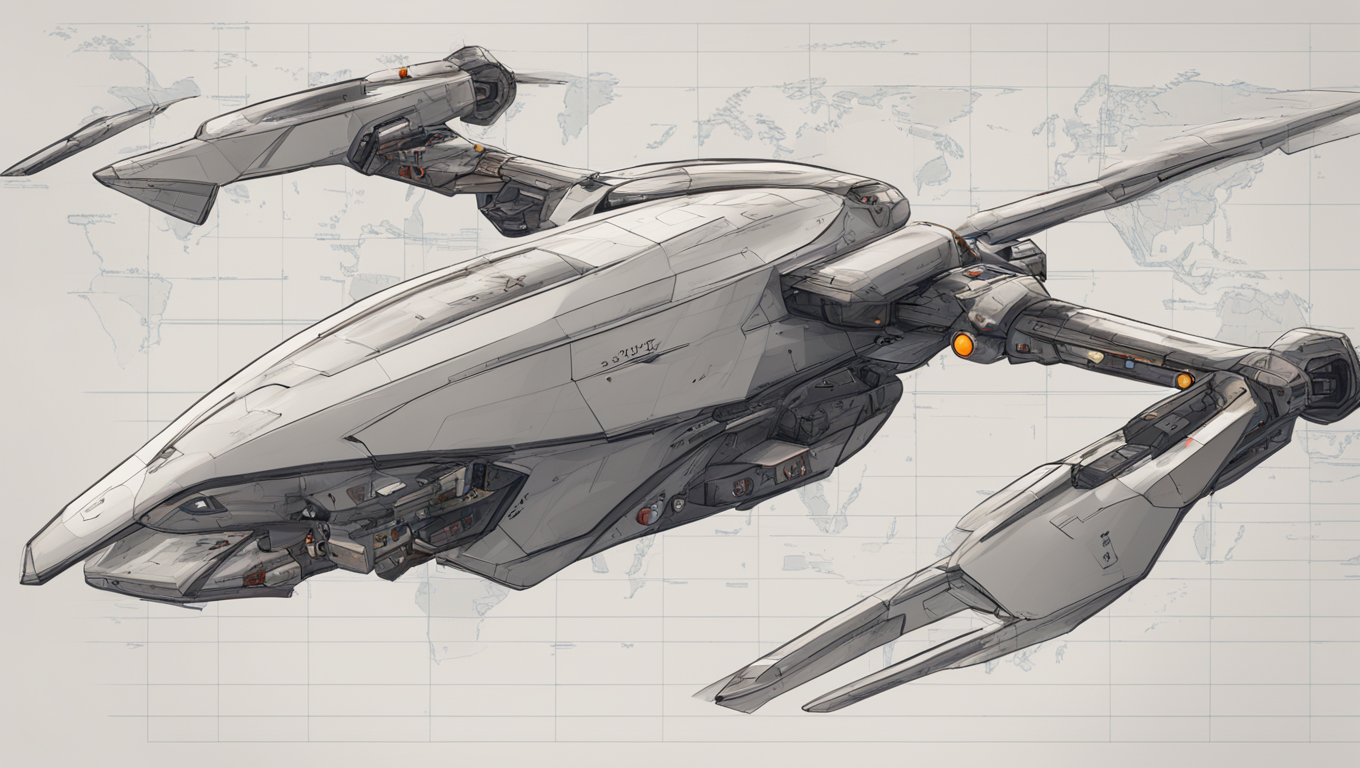

These meetings in Washington follow a recent summit in Silicon Valley organized by the Defense Innovation Unit. This summit focused on the Department of Defense’s “Replicator” initiative, which aims to integrate AI into weapons systems. The military’s goal is to field thousands of all-domain autonomous systems by August 2025, with an emphasis on responsible deployment following the policy outlined in DoD Directive 3000.09.

While concerns about the ethics of AI replacing human judgment on the battlefield are prominent, military officials stress that it is necessary to explore the opportunities offered by AI. Adm. Samuel J. Paparo, speaking at the Silicon Valley summit, argued that replacing humans with machines will ultimately save lives. “We should never send a human being to do something dangerous that a machine can do for us. When doing so, we should never have human beings making decisions that can’t be better aided by machines.”

As the symposium progresses, it is clear that the Department of Defense is fully committed to unlocking the power of AI in defense operations while addressing the ethical challenges and working towards global standards. The collaboration between government entities, tech companies, and international representatives underscores the importance of responsible AI deployment in the military.
