Artificial intelligence experts and industry executives are joining forces to advocate for stricter regulation of deepfakes. In an open letter titled “Disrupting the Deepfake Supply Chain,” the group highlights the potential risks to society and calls for safeguards to be put in place.

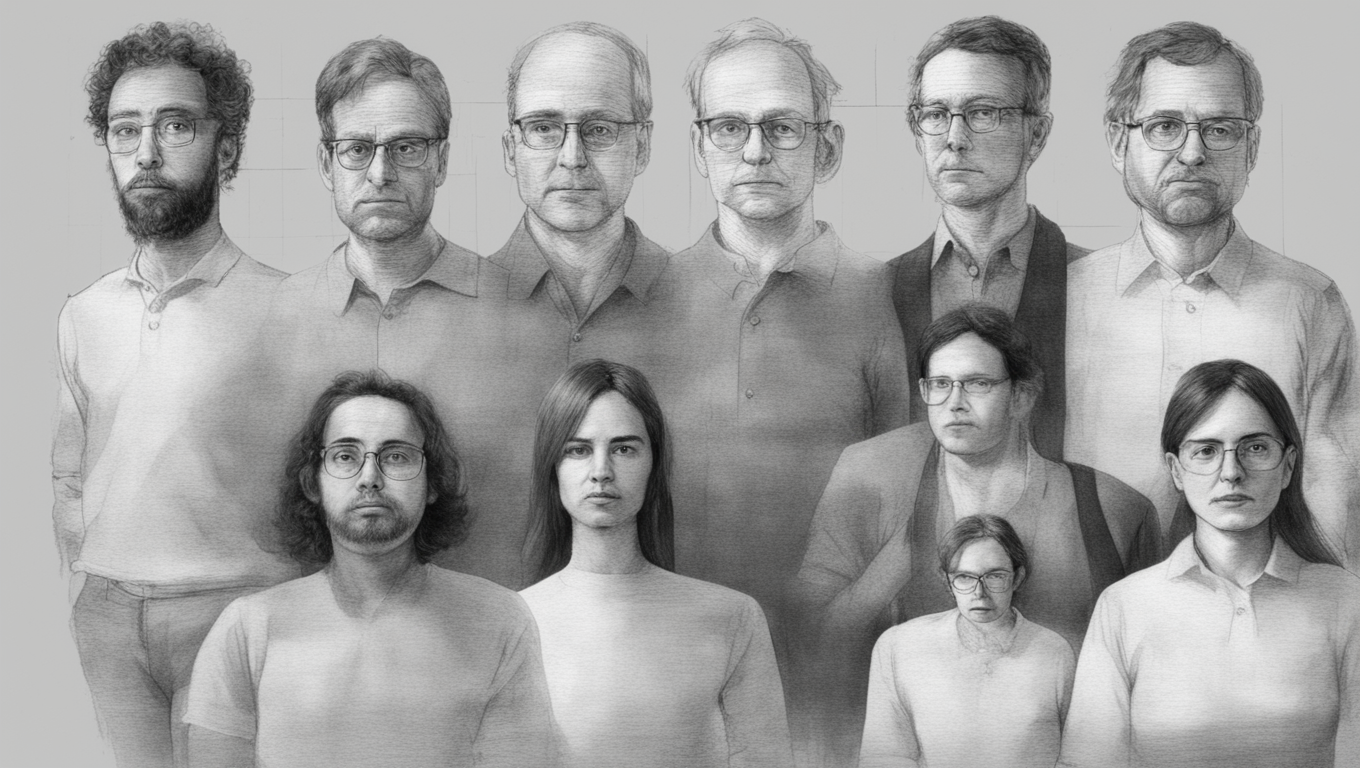

Deepfakes are realistic yet fabricated images, audio, and videos created by AI algorithms. Recent advances in the technology have made them increasingly difficult to distinguish from human-created content. This has raised concerns that deepfakes could be used for sexual imagery, fraud, or political disinformation.

According to Yoshua Bengio, one of the trailblazers in the field of AI, “Safeguards are needed because AI is progressing rapidly and making deepfakes much easier to create.” Bengio is just one of over 400 individuals from various industries, including academia, entertainment, and politics, who have signed the open letter.

Andrew Critch, an AI researcher at UC Berkeley, spearheaded the effort to gather support for the regulations outlined in the letter. The recommendations include criminalizing deepfake child pornography, imposing criminal penalties on individuals involved in creating or spreading harmful deepfakes, and requiring AI companies to develop measures that prevent their products from creating harmful deepfakes.

The urgency for regulating deepfakes stems from the accelerating progress of AI technology. In late 2022, OpenAI unveiled ChatGPT, an AI chatbot that stunned users with its ability to engage in human-like conversation and perform various tasks. Its release heightened concerns about AI risks, and in 2023 a separate open letter called for a six-month pause in the development of AI systems more powerful than OpenAI's GPT-4.

Steven Pinker, a Harvard psychology professor, and Joy Buolamwini, founder of the Algorithmic Justice League, are among the signatories of the letter. Two former Estonian presidents, researchers from Google DeepMind, and a researcher from OpenAI have also lent their support.

As society becomes increasingly reliant on AI technology, the focus on ensuring that these systems do not harm society has become paramount. The call for regulation surrounding deepfakes is an important step in addressing the potential risks associated with their misuse.

With the backing of prominent figures in the AI field and beyond, the open letter serves as a wake-up call to both policymakers and AI companies. Its signatories argue that regulating deepfakes is a pressing matter and that measures must be taken to protect society from the technology's harmful effects.
