Meet Alter3: The Humanoid Robot Learning Language and Emotions

Picture a world where you have a robot companion who can take selfies, play air guitar, and even pretend to eat popcorn. That reality may not be too far off. Researchers at the University of Tokyo have developed Alter3, a humanoid robot that can act out these activities and more by using a large language model to turn written instructions into movement.

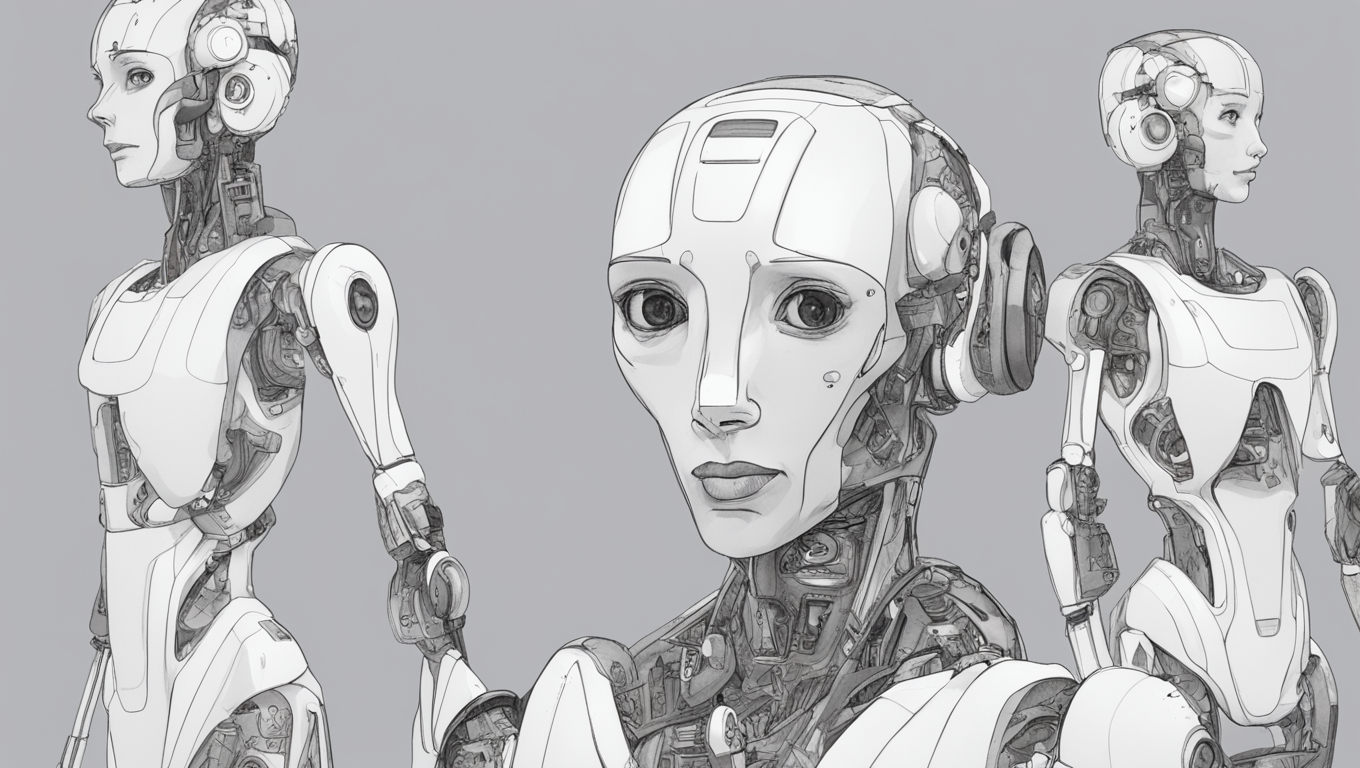

Alter3 is the third iteration of the Alter humanoid robot series, which was first introduced in 2016 as a platform for exploring the concept of life in artificial systems. It has a realistic appearance and is equipped with 43 axes controlled by air actuators, allowing it to move its upper body, head, and facial muscles. The robot also has cameras in its eyes that enable it to see and interact with humans and its environment.

What sets Alter3 apart is its use of GPT-4, OpenAI's large language model (LLM). GPT-4 is a deep learning model that generates natural language text, and program code, from a given prompt. Instead of programming each action for the robot by hand, researchers can now give Alter3 plain-language instructions, and GPT-4 will generate the corresponding Python code that controls its movements and behaviors.

For example, to make Alter3 take a selfie, researchers can provide instructions like, “Create a big, joyful smile and widen your eyes to show excitement. Swiftly turn the upper body slightly to the left, adopting a dynamic posture. Raise the right hand high, simulating a phone. Flex the right elbow, bringing the phone closer to the face. Tilt the head slightly to the right, giving a playful vibe.” GPT-4 will then produce the code necessary to make Alter3 execute those precise movements.
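To make the instruction-to-code step more concrete, here is a minimal sketch of how generated Python might be run against a robot interface. Everything in it is an assumption made for illustration: the `RobotStub` class, the `set_axis` method, the 0 to 255 value range, and the axis numbers are hypothetical and are not taken from the researchers' code. Only the figure of 43 axes comes from the article.

```python
from dataclasses import dataclass, field

NUM_AXES = 43  # the article states that Alter3 has 43 axes


@dataclass
class RobotStub:
    """Stand-in for the robot: it only records commands, no hardware involved."""
    log: list = field(default_factory=list)

    def set_axis(self, axis: int, value: int) -> None:
        # Hypothetical interface: axis index 1..43, value in an assumed 0..255 range.
        if not 1 <= axis <= NUM_AXES:
            raise ValueError(f"axis {axis} is outside 1..{NUM_AXES}")
        if not 0 <= value <= 255:
            raise ValueError(f"value {value} is outside 0..255")
        self.log.append((axis, value))


# Text of the kind an LLM might return for "smile and raise the right hand".
# The axis numbers and values are invented for this example.
GENERATED_CODE = """
robot.set_axis(1, 255)   # assumed: mouth corners up (smile)
robot.set_axis(20, 200)  # assumed: raise right shoulder
robot.set_axis(22, 180)  # assumed: flex right elbow
"""


def run_generated(code: str, robot: RobotStub) -> list:
    """Execute generated code with only the name `robot` exposed to it.

    Emptying the builtins narrows what the code can reach, but this is
    not a real sandbox and should not be treated as one.
    """
    exec(code, {"__builtins__": {}}, {"robot": robot})
    return robot.log


if __name__ == "__main__":
    for axis, value in run_generated(GENERATED_CODE, RobotStub()):
        print(f"axis {axis} -> {value}")
```

The stub validates every command before recording it, which is one plausible way to keep out-of-range values in generated code from reaching actuators.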

The researchers have tested Alter3’s capabilities with GPT-4 in various scenarios, including tossing a ball, eating popcorn, and playing air guitar. They have also explored different types of feedback, such as linguistic, visual, and emotional, to enhance the robot’s performance and adaptability.

One fascinating aspect of Alter3’s behavior is its ability to learn from its own memory and human responses. If the robot does something that elicits laughter or a smile from a human, it will remember that and attempt to recreate it in the future, much like how newborn babies imitate their parents' expressions and gestures.
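The article does not describe how this memory works, so the following is only a toy illustration of the general idea: record which actions drew a positive human reaction and prefer those later. The `ReactionMemory` class, its method names, and the reaction labels are all hypothetical.

```python
from collections import defaultdict
from typing import Optional

# Assumed labels for reactions that count as positive feedback.
POSITIVE_REACTIONS = {"laugh", "smile"}


class ReactionMemory:
    """Counts how often each action drew a positive human reaction."""

    def __init__(self) -> None:
        self._scores = defaultdict(int)

    def record(self, action: str, reaction: str) -> None:
        # Every action is remembered; only positive reactions add to its score.
        self._scores[action] += 1 if reaction in POSITIVE_REACTIONS else 0

    def best_action(self) -> Optional[str]:
        # Return the action with the highest score, or None if nothing is stored.
        if not self._scores:
            return None
        return max(self._scores, key=self._scores.get)


if __name__ == "__main__":
    memory = ReactionMemory()
    memory.record("air guitar", "laugh")
    memory.record("selfie", "neutral")
    memory.record("air guitar", "smile")
    print(memory.best_action())
```

A simple count is used here for clarity; a real system would presumably need to detect reactions from camera input and weigh them in a more nuanced way.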

The addition of humor and personality to Alter3’s actions makes the robot even more engaging. In one instance, the robot pretends to eat a bag of popcorn, only to realize that it belongs to the person sitting next to it. It then displays a surprised and embarrassed expression and gestures an apology with its arms.

The Alter3 research team believes that this fusion of natural language processing and robotics represents a breakthrough in the field of artificial intelligence. It demonstrates how large language models can bridge the gap between natural language and robot control, paving the way for improved human-robot collaboration and communication. Moreover, it opens up possibilities for the development of more intelligent, adaptable, and personable robotic entities.

The researchers' paper, titled “From Text to Motion: Grounding GPT-4 in a Humanoid Robot ‘Alter3’,” has been made available on the preprint server arXiv. Their hope is that their work will inspire further research and development in this direction, eventually leading to the creation of robot companions that can understand and share our interests and emotions.

Alter3 showcases the potential of combining natural language processing and robotics to create convincingly human-like interactions. Through the use of GPT-4, the robot can perform a wide range of tasks and behaviors based on plain-language commands, without extensive programming or manual control. With its ability to learn and to express humor and personality, Alter3 represents a significant advancement in robotics and artificial intelligence. Who knows, in the not-too-distant future, we may all have robot friends that can truly connect with us.
