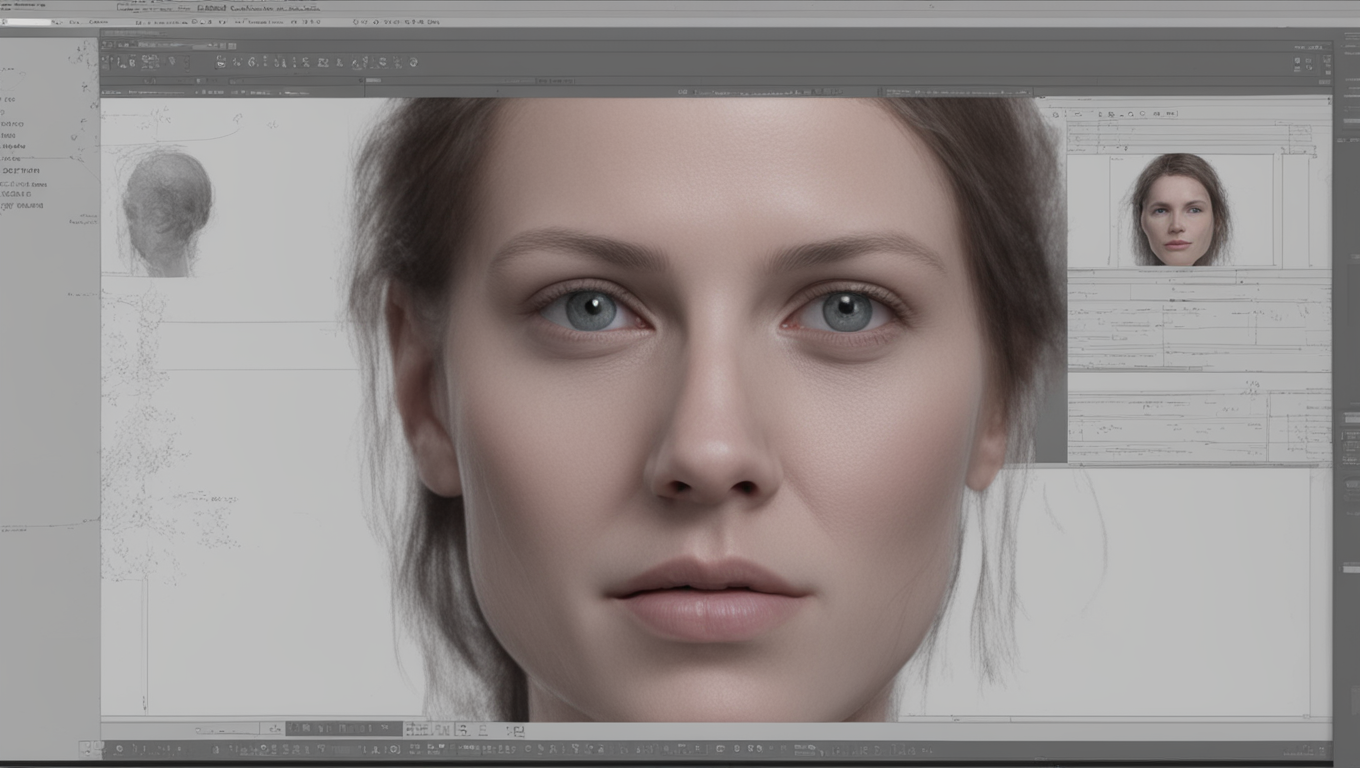

The rise of deepfakes, AI-generated video and audio that can convincingly impersonate real people, is a growing concern for law enforcement and lawmakers around the world. Deepfakes can be used to manipulate and harm individuals, damaging reputations and enabling crimes such as fraud. The technology can now replicate not only a person’s face but also their voice, making it difficult to tell what is real from what is fake.

One recent example is the case of New Hampshire voters who received robocalls featuring an AI-generated imitation of President Biden’s voice that discouraged them from voting in the state’s January primary. The person who orchestrated the calls now faces felony charges and a proposed fine from the Federal Communications Commission (FCC). The incident shows how hard it is for law enforcement to keep pace with this fast-moving technology.

Joshua Levy, the acting U.S. attorney for Massachusetts, has expressed concern about the impact of deepfakes on the justice system. He noted that the technology can cast doubt on time-tested forensic evidence, such as audio and video recordings, on which prosecutors’ cases often depend. Proving a defendant’s guilt beyond a reasonable doubt becomes harder when AI makes it plausible that even authentic recordings were fabricated, a doubt that can be difficult to refute.

Recognizing the need to regulate the technology, President Biden signed an executive order on artificial intelligence in October 2023. The order directed the Department of Commerce to develop guidance for labeling AI-generated content, aiming to protect Americans from AI-enabled fraud and deception. It also called for research funding to strengthen privacy protections.

In February, the U.S. Department of Justice appointed its first chief artificial intelligence officer to lead its efforts to understand and navigate these emerging technologies. The move reflects the department’s stated commitment to keeping pace with scientific and technological developments in order to uphold the rule of law and protect civil rights.

Governments outside the U.S. have also moved to regulate AI. In March, the European Parliament approved the EU’s AI Act, a comprehensive framework governing the use of artificial intelligence. The act aims to keep humans in control of the technology and to protect individuals from its potential harms.

At the state level, several U.S. states have moved to regulate AI on their own in the absence of federal legislation. Massachusetts, for example, issued an advisory explaining how existing laws and regulations apply to the development and use of AI products. The advisory acknowledges the potential benefits of AI but stresses the need to mitigate risks such as bias and a lack of transparency.

California, home to many of the largest tech companies, is also trying to lead on AI regulation. Governor Gavin Newsom has said he wants the state to dominate the AI space, with regulations that strike the right balance without driving tech firms away. California’s approach also emphasizes using AI tools to address social problems such as homelessness.

The risks posed by deepfakes are extensive. Manipulated videos can be used to spread false information, exploit individuals, and sabotage reputations. Deepfakes could also undermine democracy and national security, for instance through convincing fake videos of public officials engaging in illegal activities or discussing sensitive topics.

Another concern is the use of deepfakes in pornography. Machine-learning tools can produce realistic videos in which a person’s face is inserted into explicit content without their consent. This hijacks victims’ sexual and intimate identities and violates their privacy. Traditional privacy laws are ill-equipped to address such invasions, which makes this form of exploitation difficult to combat through the courts.

Fighting deepfakes requires a multifaceted approach that combines technological advances, legal regulation, and public awareness. As the technology improves, societies must stay vigilant and proactive in protecting individuals from its harms. Without appropriate safeguards, deepfakes could erode trust, destabilize institutions, and upend individuals’ lives.
