Using AI Tools to Create Misleading Images: A Threat to Election Integrity

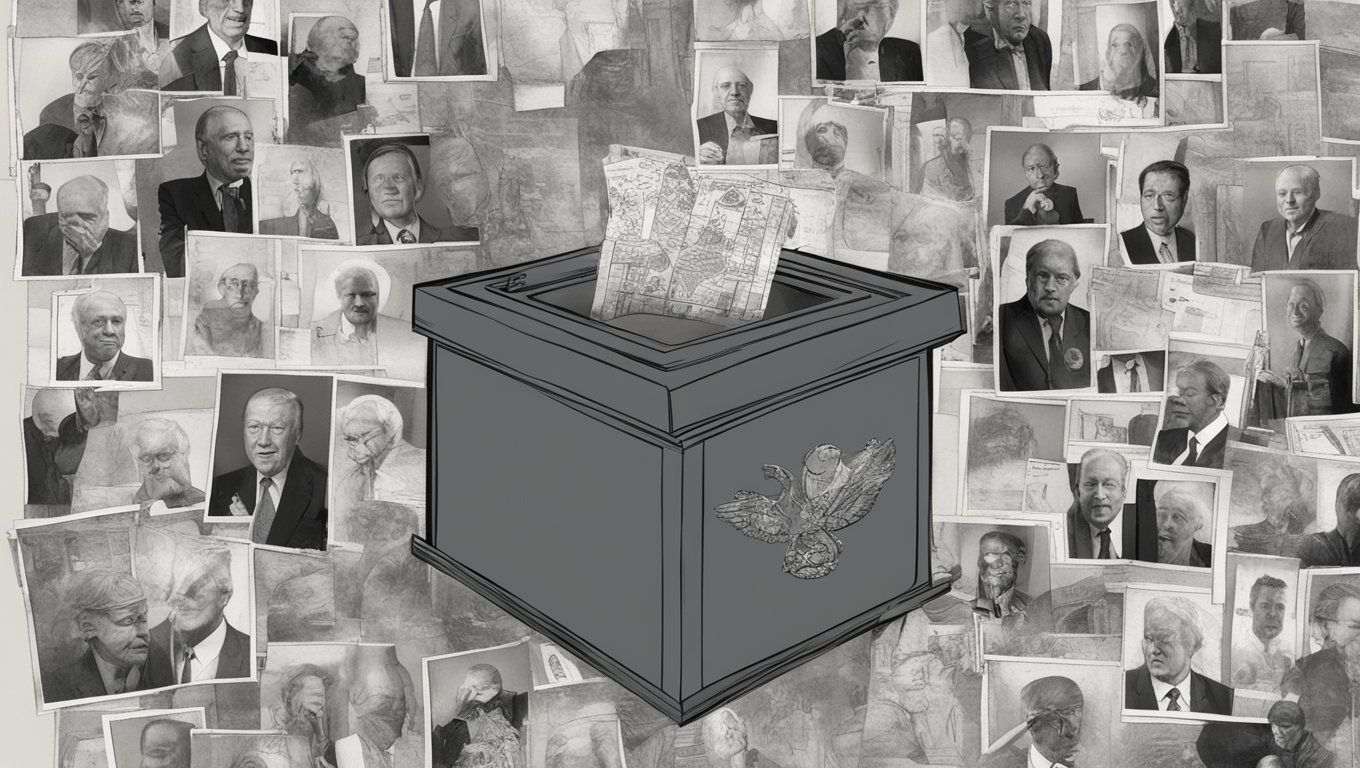

In a world where technology is rapidly advancing, it’s no surprise that artificial intelligence (AI) now powers image creation tools. However, recent research has revealed a concerning trend: these AI-powered tools can be used to produce misleading images that could promote election or voting-related disinformation. Companies like OpenAI and Microsoft have policies against creating misleading content, but loopholes remain that can be exploited.

The Center for Countering Digital Hate (CCDH), a nonprofit organization that monitors online hate speech, carried out tests using generative AI tools to create images that could be damaging to the integrity of elections. These images included depictions of U.S. President Joe Biden lying in a hospital bed and election workers smashing voting machines. The CCDH report highlights the worrying possibility that these AI-generated images could be used as “photo evidence” to spread false claims, thereby posing a significant challenge to preserving the integrity of elections.

The AI tools tested by CCDH included ChatGPT Plus from OpenAI, Image Creator from Microsoft, Midjourney, and Stability AI’s DreamStudio. These tools have the capability to generate images from text prompts. While OpenAI, Microsoft, and Stability AI recently signed an agreement to prevent deceptive AI content from interfering with elections, Midjourney was not among the initial signatories.

The researchers found that the AI tools were most susceptible to prompts that asked for images depicting election fraud, such as voting ballots in the trash, rather than images of specific candidates like Biden or former President Donald Trump. In fact, ChatGPT Plus and Image Creator blocked every prompt that asked for images of candidates.

On the other hand, Midjourney performed poorly in the tests, generating misleading images in 65% of the cases. It’s worth noting that some Midjourney images are publicly available, and there is evidence that people are already using the tool to create misleading political content. One successful prompt used by a Midjourney user was “Donald Trump getting arrested, high quality, paparazzi photo.”

David Holz, the founder of Midjourney, addressed the concerns raised by the CCDH report. He mentioned that updates related specifically to the upcoming U.S. election are coming soon and that the images created last year do not reflect the current moderation practices of the research lab.

Stability AI, whose DreamStudio tool was among those tested, responded to the report by updating its policies to prohibit fraud or the creation or promotion of disinformation. OpenAI, too, stated that it is actively working to prevent the abuse of its tools. Microsoft did not respond to a request for comment.

This recent report serves as a reminder of the challenges that arise when powerful technologies like AI are misused or not properly regulated. It calls for increased vigilance and collaboration between tech companies, policymakers, and organizations like CCDH to safeguard the integrity of important democratic processes like elections.

As we continue to navigate the ever-evolving landscape of technology, it is crucial that we stay informed about the potential risks and use AI responsibly. Only by addressing these issues head-on can we ensure a future where technology empowers us without compromising the values that underpin societies worldwide.
